08. (Optional) Challenge: Play Soccer

After you have successfully completed the project, you might like to solve a more difficult environment, where the goal is to train a small team of agents to play soccer.

You can read more about this environment in the ML-Agents GitHub repository.

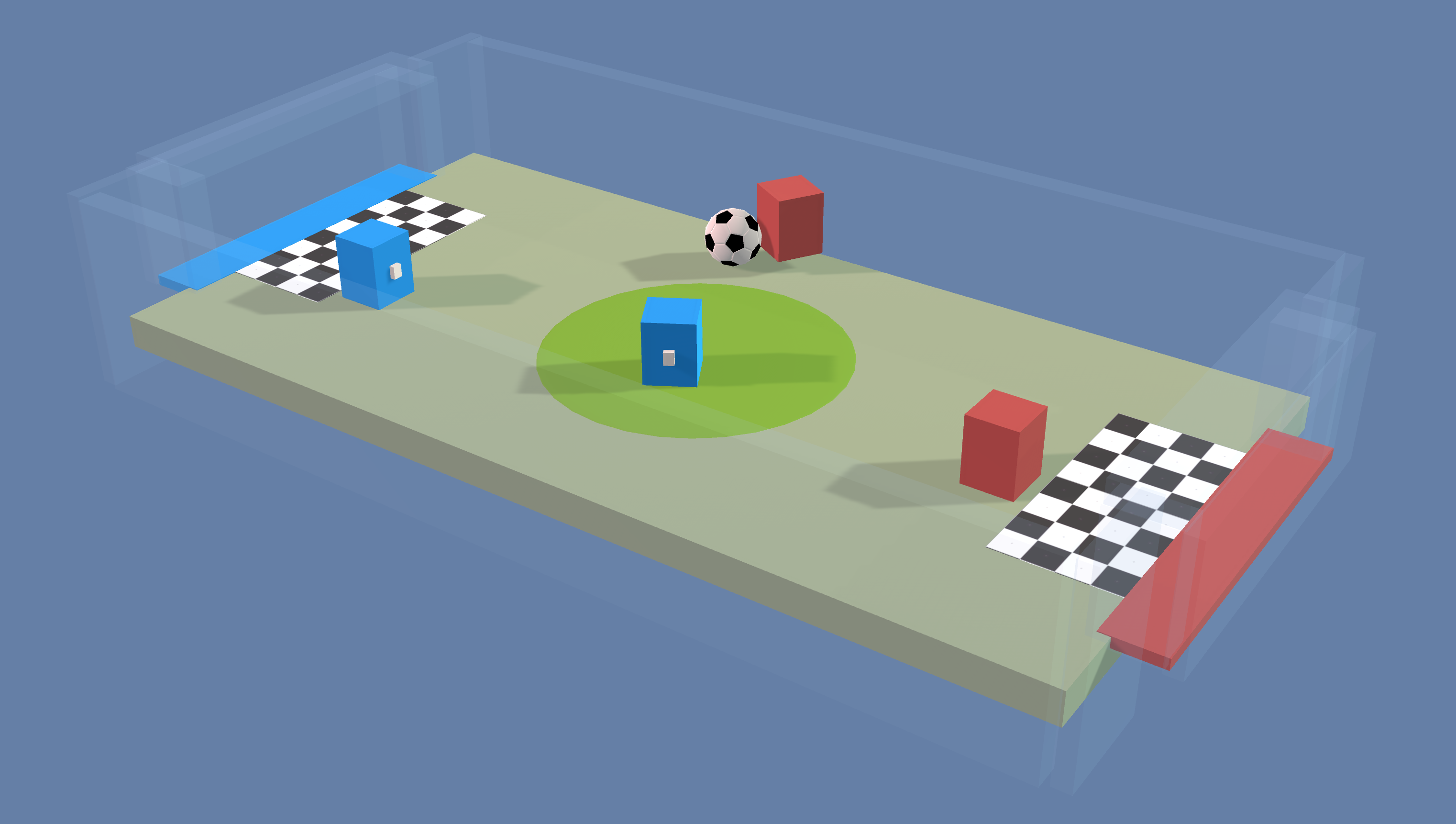

ML-Agents Soccer Environment

## Download the Unity Environment

To solve this harder task, you'll need to download a new Unity environment. You need only select the environment that matches your operating system:

- Linux: click here

- Mac OSX: click here

- Windows (32-bit): click here

- Windows (64-bit): click here

Then, place the file in the `p3_collab-compet/` folder in the DRLND GitHub repository, and unzip (or decompress) the file.
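If you prefer to decompress the archive from Python rather than with your file manager, the step can be sketched with the standard-library `zipfile` module. This is only a minimal sketch: the helper name `unzip_environment` is hypothetical, and it assumes the download is a regular `.zip` archive.

```python
import zipfile
from pathlib import Path


def unzip_environment(archive_path, target_dir):
    """Extract a downloaded environment archive into target_dir.

    Returns the list of member names stored in the archive, so you can
    check which files were unpacked.
    """
    target = Path(target_dir)
    # Create the destination folder if it does not exist yet.
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive_path) as archive:
        archive.extractall(target)
        return archive.namelist()
```

You would call it with the path of the file you downloaded and `p3_collab-compet/` as the target directory. Note that `zipfile` does not restore Unix executable permissions, so on Linux or Mac you may still need to mark the extracted binary as executable.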

Please do not submit a project with this new environment. You are required to complete the project with the Tennis environment that was provided earlier in this lesson, in The Environment - Explore.

(For AWS) If you'd like to train the agents on AWS (and have not enabled a virtual screen), then please use this link to obtain the "headless" version of the environment. You will not be able to watch the agents without enabling a virtual screen, but you will be able to train the agents. (To watch the agents, you should follow the instructions to enable a virtual screen, and then download the environment for the Linux operating system above.)

## Explore the Environment

After you have followed the instructions above, open `Soccer.ipynb` (located in the `p3_collab-compet/` folder in the DRLND GitHub repository) and follow the instructions to learn how to use the Python API to control the agent.
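To give a rough idea of what controlling the agents looks like, here is a minimal sketch of one episode played with uniformly random actions. It is not the notebook's code. The function name `run_random_episode` is hypothetical, and `env` is assumed to behave like the `UnityEnvironment` class from the `unityagents` package, exposing `brain_names`, `brains`, `reset(train_mode=...)` and `step(...)`, with a discrete action space for every brain.

```python
import random


def run_random_episode(env, max_steps=1000, seed=None):
    """Play one episode, choosing a random discrete action for every agent.

    Assumption: `env` follows the unityagents.UnityEnvironment interface.
    Returns a dict mapping each brain name to the summed rewards of its agents.
    """
    rng = random.Random(seed)
    env_info = env.reset(train_mode=True)
    names = list(env.brain_names)
    # One running score per agent, grouped by the brain that controls it.
    scores = {n: [0.0] * len(env_info[n].agents) for n in names}

    for _ in range(max_steps):
        # Each brain receives one action index per agent it controls.
        actions = {
            n: [rng.randrange(env.brains[n].vector_action_space_size)
                for _ in env_info[n].agents]
            for n in names
        }
        env_info = env.step(actions)
        for n in names:
            for i, reward in enumerate(env_info[n].rewards):
                scores[n][i] += reward
        # Stop as soon as any agent reports that the episode has ended.
        if any(any(env_info[n].local_done) for n in names):
            break
    return scores
```

With the real environment you would create `env` by passing the path of the unzipped file to `UnityEnvironment(file_name=...)`, run the function, and call `env.close()` when finished. Random play will not score well, but it is a quick way to confirm that the environment loads and responds to actions.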